LTER Synthesis Groups use existing data to address fundamental ecological questions—and have generated invaluable cross-ecosystem insight into the natural world. But in such a data heavy process, synthesis groups inevitably spend a disproportionate time wrangling data and writing code before they can test and refine their hypotheses.

Credit: Gabriel De La Rosa, CC BY-SA 4.0

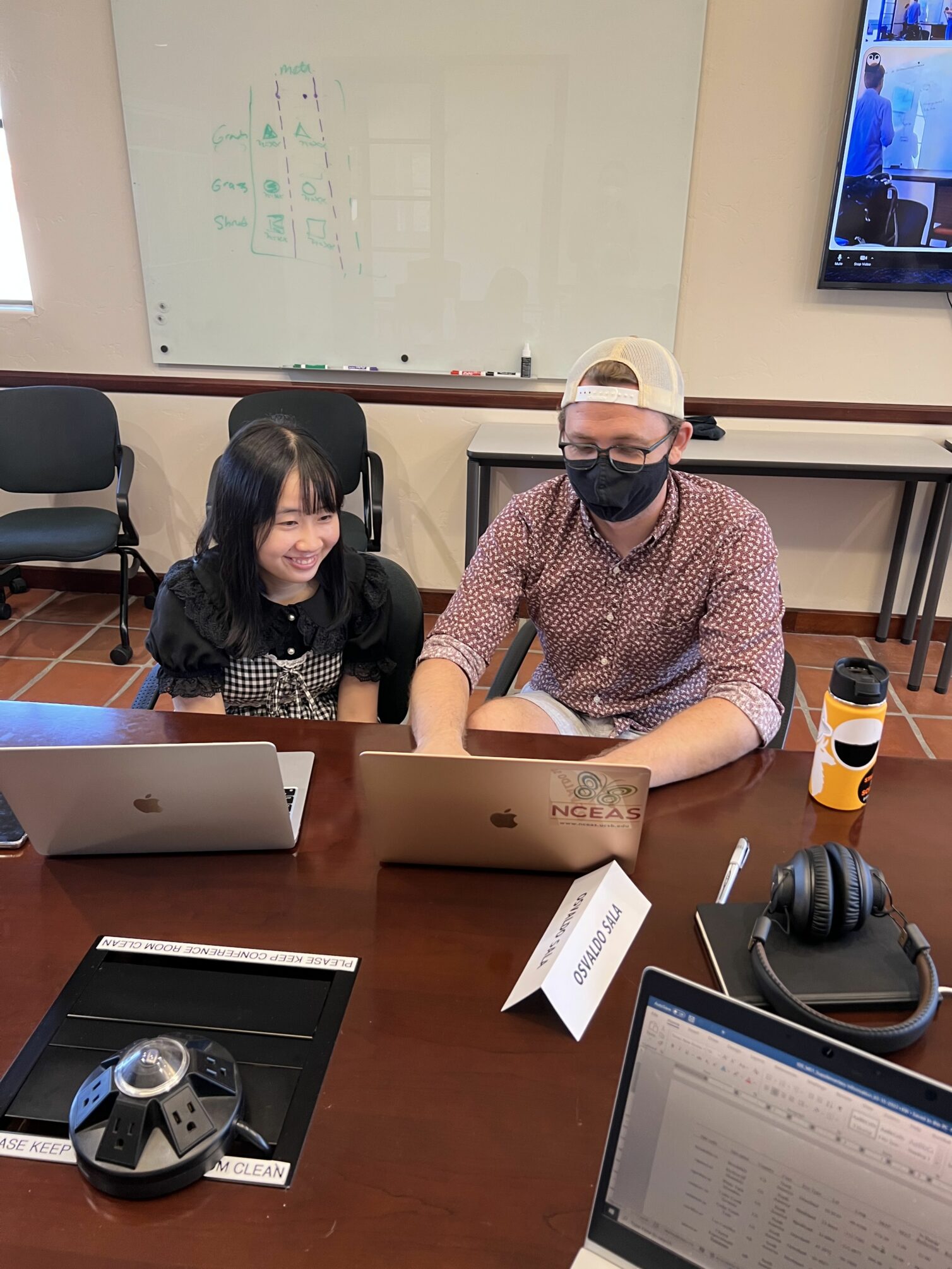

To help working groups focus on the analysis, rather than methodology, the LTER Network Office hired two data analysts, Angel Chen and Nick Lyon, in 2021. They tackle short but critical wrangling tasks during working groups’ in-person meetings, grind away at long-running bits of analysis from afar, and teach critical collaborative and technical skills to facilitate easier collaboration within groups. The result is that working groups spend less time bogged down in technical troubleshooting and more time thinking about, analyzing, and orchestrating novel science.

In the storm

For most groups, Nick and Angel first reveal their utility while sitting in on four day working group meetings at NCEAS, which houses the LTER Network Office.

For example, when the Drought Effects synthesis group met in September 2022, Nick and Angel sat in on every hour of the meeting. “We’re listening, we’re paying attention, and they start asking questions like ‘What would be a good figure to visualize this?’” recounts Nick. “And then Angel or I would chime in and say, ‘oh, well, you know, you could make a violin plot and it would let you see distribution and also sample size,” and suddenly the analysts are put to work.

Credit: Gabriel De La Rosa, CC BY-SA 4.0

A major benefit of having two dedicated analysts on call is that groups can partition them off to complete tasks while continuing to do the conceptual work of synthesis. And while plotting is a relatively light lift, other tasks might require more attention.

For example, when the Plant Reproduction synthesis group realized their compiled dataset was missing a key metric, seed mass for each species, they called on the analysts. “They were like, ‘okay, we have over 100 species and we need to find all of their seed masses’”, says Nick. “And so we found a database that includes this information and we queried it for all of those species.”

By the following afternoon, their dataset had the required information—and the other group members kept cranking through their other work in the meantime. “It made everything so much more efficient to have them there,” says Jalene LaMontagne, lead PI of the Plant Reproduction synthesis group.

And because there are two data analysts onboard, the analysts themselves can split up and partition their time during the meetings. The Plant Reproduction group, for example, broke into several small groups to tackle two aspects of their project during their in-person week in October 2022. While Nick was wrangling the plant data, Angel sat with another group developing a data processing script. “If they ever ran into issues, then I could just be there to help them in real time,” she says.

Through the doldrums

In-person meetings are short, and split by long-periods of remote work for the groups. In these between periods, analysts can tackle daunting analytical tasks. By applying full-time effort, they speed through tasks that might take a group with variable schedules and commitments much longer to complete.

Credit: NCEAS, CC BY-SA 4.0.

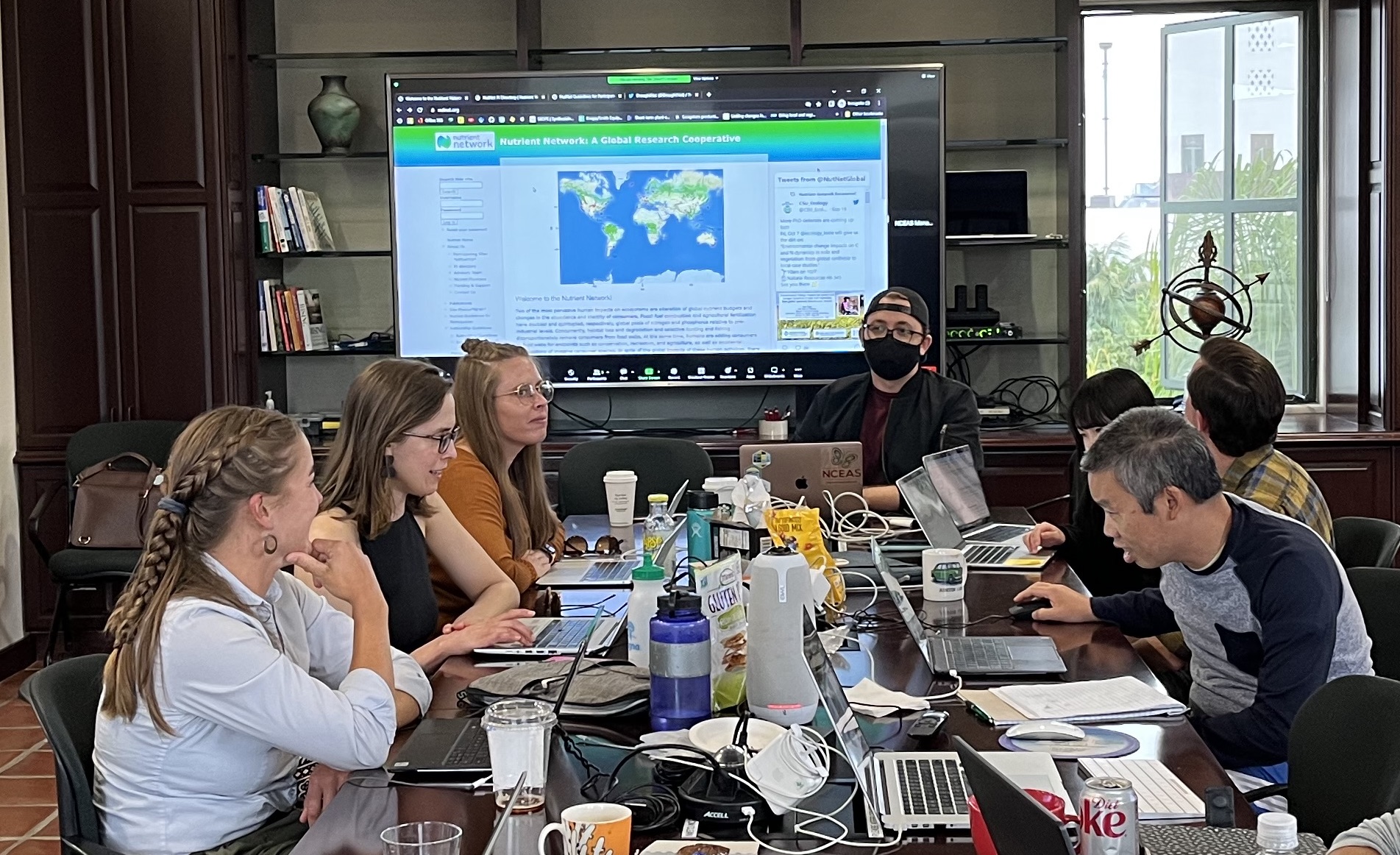

For example, the Silica Synthesis working group had silica export data from rivers around the globe, a huge dataset compiled during their in-person meeting. They needed a way to compare trends between all the rivers across time, a convoluted analysis with quite a few moving parts—but had months before their next in-person meeting.

They put the analysts on the task. After an in-depth meeting that honed in on exactly what kind of analysis the group needed, Nick split off and developed a workflow that achieved their goals over the course of a week. The resulting workflow—a breakpoint analysis with several factors that group members could manipulate—meant that the group could start playing with data, rather than spending time mired in methodological purgatory.

Nick packaged the workflow in a convenient R package. This package, accessible through GitHub, guaranteed that each member of the working group could instantly access the latest version of the script. Then, he created a document walking through the workflow, including instructions on how group members can alter the parameters to suit their own analytical needs.

This is an example of what the analysts call an “analytical sprint,” a two-week period of focused effort towards a working group task. Sprints are an all-hands-on-deck effort to surmount a technical problem that may be preventing groups from making rapid progress. In the Silica Synthesis case, the group let Nick develop a protocol that, when finished, would let them start an in-depth exploration of the actual data. As a result, the group’s brainpower and, importantly, available time, was spent analyzing and understanding the data, rather than writing code.

Teach a researcher to code…

Two analysts can’t do everything, of course, and often working group researchers want or need to dig deep into their own analysis. With a dozen or so members in any given group, synchronized coding practices and reproducible scripts are crucial for efficient work. Yet most researchers never receive formal training on collaborative data analysis, partly because many individual academics rarely develop scripts in large groups.

Data analysts help facilitate better collaborative synthesis by teaching a suite of tools specifically designed to make coding with others easier. These include formal workshops on using GitHub or the Tidyverse R package, which promote easier remote collaboration or common and readable coding syntax, respectively.

Credit: NCEAS, CC BY-SA 4.0

Outside of formal workshops, analysts are always looking for ways that code can be more efficient or more reproducible. And they’re well equipped to do so: as part of the larger data science community at NCEAS, they’re constantly learning new and better ways to code. This is particularly important in today’s rapidly developing software environment.

The analysts tap into the cutting edge of data science in the same way specialized researchers tap into cutting edge conversations in their discipline. “I feel super comfortable just asking people, hey, does anyone know how to do this?,” remarks Angel on the NCEAS data science community. “I could always ask ‘how do I resolve this error?’ Or, ‘is there a more efficient way that this piece of code could be written?’” The resulting knowledge trickles out to working groups—and the final analysis is built to the highest standards of reproducibility.

Little things, big things

Certain groups have started to realize that analysts can be useful in all sorts of ways. For example, the Plant Reproduction group realized that their LTER All Scientists’ Meeting poster should include a key table that they didn’t yet have made. So they called on Nick and Angel, who put together the figure in time for the meeting.

The analysts started holding office hours once a week to give synthesis researchers a chance to request help. Lead PI of the Plant Reproduction group, Jalene, visited the first one, asking for help with a small data summary task. In others, Nick and Angel walked researchers through nuances of GitHub or taught nifty coding functions that avoid convoluted code. These aren’t full blown workshops, but a quick refresher or tutorial to circumvent an impending roadblock.

“Office hours are perfect because the time is already allocated to helping groups,” says Angel. “It doesn’t have to be an email. People can pop into the standing meeting and ask, “Hey, can you help me troubleshoot my code real quick?” So far, each office hours has been well attended—indicating that they’re a much appreciated resource.

Synthesis science: part concept, part mechanics

With five active working groups analyzing everything from silica exports in rivers across the globe to soil metagenomic data, data analysts by nature must perform a wide range of tasks. And, while they don’t have the specific ecological expertise that working group researchers do, they are skilled data scientists, always searching for better, more efficient, and innovative ways of processing data.

“Synthesis science really is defined by data harmonization and data synchronization, data management, quality assurance, quality control type problems,” says Nick. “My goal is to meet all of these data obstacles and then either reduce or eliminate them so that the group can keep moving at full speed.”

“The analogy I’ve settled on for these groups is that they’re architects and we’re carpenters. They’re building a house and they’re like, ‘Oh, we need a super cool custom dining room table with roughly these dimensions,’” reflects Nick. Then, the analysts go build the table. Or write the script.